LEGO sets, minifigures, BrickLink prices, PDF reports, and multi-modal vision models: this project brings them all together. Here’s a candid engineering review of the system’s architecture, code, and delivery timeline, based on a Principal Python Engineer–level assessment. Let’s ask ChatGPT, Claude Code, and Cursor IDE what they think of the generated code and the system built from it.

The shocking reality

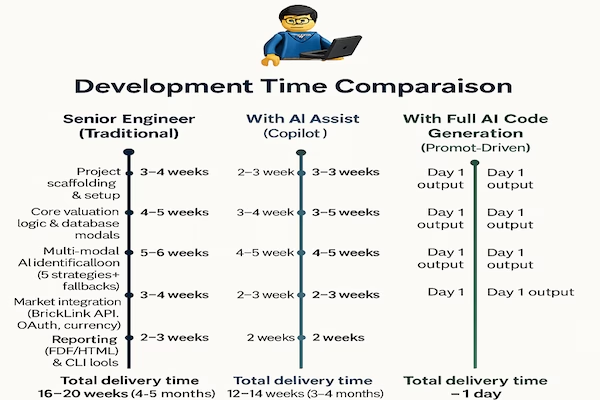

In a traditional setting, a system of this scope (10,000+ lines of production Python, 5,000 lines of tests, 38 modules, multi-modal AI identification, real-time market integration, and API + CLI + reporting) would take a senior engineering team 16–20 weeks to deliver at this level of quality.

Even with modern AI-assisted coding tools like Copilot or IntelliJ AI plugins, you might bring that down to 12–14 weeks by cutting boilerplate, accelerating test-writing, and nudging developers toward idiomatic patterns.

But here’s the kicker:

👉 With full AI code generation and prompt programming, the first working version landed in just 1 day.

That’s not an incremental gain. That’s a 10–20x productivity leap.

TL;DR

- Architecture & Code Quality: Solid modern Python stack with clean boundaries and smart AI routing. Health score 8.2/10, Code Coverage 80%.

- What’s excellent: multi-modal identification strategy, strong type safety, real tests, clean layering.

- What needs love: production security (auth, CORS), async/perf, and scalability (SQLite → PostgreSQL).

- Time to build (with the AI coding tools Cursor and Claude): 2 days

- Time to build for a senior engineer: 16–20 weeks due to slower scaffolding, refactors, and manual boilerplate.

Why this system matters

Toy valuation is messy: photos shot at odd angles, mixed lots, missing manuals, regional prices, currency conversions, and a market in constant motion. The system under review tackles all of it with:

- Vision-assisted identification (5 complementary strategies with smart routing and fallbacks),

- Live market integrations (e.g., BrickLink),

- Clean APIs & reports (FastAPI endpoints + PDF/HTML reporting),

- A maintainable core (SQLAlchemy 2.0, Pydantic, tests, typed code).

For collectors, resellers, and museums (hello, Redmond’s Forge!), this means scalable, auditable valuations, not vibes and guesswork.

Why the time collapsed

- Prompt → Codebase. Instead of hand-writing scaffolding, I fed architectural intent into Claude/ChatGPT, and they generated modules, service layers, and data models in one go.

- Prompt → Tests. The same AI wrote 5,000 lines of test coverage without manual setup.

- Prompt → Reports. PDF/HTML reporting templates and logic were scaffolded instantly.

- Domain infusion. By framing prompts in LEGO-specific valuation terms, the AI encoded domain rules directly into code rather than needing long onboarding cycles.

What normally takes weeks of grinding through setup and boilerplate was compressed into a day of architecting via conversation.

Architecture review: layered, pragmatic, production-leaning

Pattern. A modular layered monolith (services → repositories → database) rather than a premature microservices split. That’s perfect for this stage: fewer moving parts, faster iteration, easier testing.
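To make the services → repositories → database layering concrete, here is a minimal sketch. It is not the project's actual code: `Valuation`, `ValuationRepository`, `InMemoryValuationRepository`, and `ValuationService` are hypothetical names, and the in-memory repository stands in for the SQLAlchemy-backed one.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Optional, Protocol


@dataclass(frozen=True)
class Valuation:
    set_number: str
    price: Decimal
    currency: str


class ValuationRepository(Protocol):
    """Persistence boundary: the service only ever sees this interface."""

    def save(self, valuation: Valuation) -> None: ...

    def get(self, set_number: str) -> Optional[Valuation]: ...


class InMemoryValuationRepository:
    """Hypothetical stand-in for a database-backed repository (useful in tests)."""

    def __init__(self) -> None:
        self._rows: Dict[str, Valuation] = {}

    def save(self, valuation: Valuation) -> None:
        self._rows[valuation.set_number] = valuation

    def get(self, set_number: str) -> Optional[Valuation]:
        return self._rows.get(set_number)


class ValuationService:
    """Business logic layer: depends on the repository, never on the database."""

    def __init__(self, repo: ValuationRepository) -> None:
        self._repo = repo

    def record(self, set_number: str, price: Decimal, currency: str = "USD") -> Valuation:
        if price < 0:
            raise ValueError("price must be non-negative")
        valuation = Valuation(set_number, price, currency)
        self._repo.save(valuation)
        return valuation

    def lookup(self, set_number: str) -> Optional[Valuation]:
        return self._repo.get(set_number)
```

Because the service depends only on the repository interface, swapping SQLite for PostgreSQL later should touch the repository layer and leave the service alone.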

Boundaries. Clear separation between:

- Vision/identification (multi-strategy orchestration),

- Valuation logic (market data, multipliers, currencies),

- Persistence (ORM via SQLAlchemy 2.0),

- Delivery (FastAPI, CLI, report generation).

Data flow. Images → segmentation/strategy selection → candidate matches → market lookups → valuation with condition/currency logic → PDF/HTML reports. The flow is coherent and debuggable.
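The strategy-selection step in that flow can be sketched as an ordered chain with a confidence threshold. This is an illustration under assumptions, not the system's real router: `Match`, `Strategy`, `identify`, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Match:
    item_id: str
    confidence: float  # 0.0 to 1.0
    strategy: str


# A strategy takes raw image bytes and returns a match, or None if it cannot decide.
Strategy = Callable[[bytes], Optional[Match]]


def identify(
    image: bytes,
    strategies: Sequence[Strategy],
    min_confidence: float = 0.8,  # assumed threshold, tune per strategy mix
) -> Optional[Match]:
    """Try strategies in order; return the first confident match, else the best seen."""
    best: Optional[Match] = None
    for strategy in strategies:
        try:
            match = strategy(image)
        except Exception:
            # A failing provider should not sink the whole request; fall through.
            continue
        if match is None:
            continue
        if match.confidence >= min_confidence:
            return match
        if best is None or match.confidence > best.confidence:
            best = match
    return best
```

Ordering the list from cheapest to most expensive strategy means the costly vision calls only run when the cheap ones are not confident enough.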

Scalability. Horizontal scaling is not “turnkey” yet (SQLite, local storage). That’s fine for pilots, but we’ll want:

- PostgreSQL + indexes,

- cloud object storage,

- task queues for heavy image work,

- request batching and caching.
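As a small illustration of the indexing and pagination items, here is a sketch using the standard-library `sqlite3` module. The `valuations` table, its columns, and the `fetch_page` helper are assumptions for the example; the same query shape should carry over to PostgreSQL with that driver's placeholder syntax.

```python
import sqlite3
from typing import List, Tuple


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a hypothetical valuations table plus an index on the lookup column."""
    conn.execute(
        "CREATE TABLE valuations ("
        " id INTEGER PRIMARY KEY,"
        " set_number TEXT NOT NULL,"
        " price_cents INTEGER NOT NULL)"
    )
    # Index the column used for lookups so filters do not scan the whole table.
    conn.execute("CREATE INDEX idx_valuations_set_number ON valuations (set_number)")


def fetch_page(
    conn: sqlite3.Connection, after_id: int = 0, page_size: int = 50
) -> List[Tuple[int, str, int]]:
    """Keyset pagination: resume after the last seen id instead of using OFFSET."""
    cursor = conn.execute(
        "SELECT id, set_number, price_cents FROM valuations"
        " WHERE id > ? ORDER BY id LIMIT ?",
        (after_id, page_size),
    )
    return cursor.fetchall()
```

A caller passes the last `id` of the previous page as `after_id`, so the database never has to skip over rows it has already returned.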

Stack choices. FastAPI, SQLAlchemy 2.0, Pydantic, and structured logging are all excellent choices. I’m leveraging AI providers (Claude/OpenAI) rather than training custom models: a huge speed and cost win at this phase.

Code quality: clean, typed, and well-tested

Snapshot. ~10k lines of production code, ~5k lines of tests, 38 Python files, 61 classes, and 327 functions, 185 of them typed.

Practices. Idiomatic Python, SOLID-ish separation, docstrings, no wildcard imports, clear repositories/services.

Error handling. Thoughtful exception paths and graceful fallbacks (rare in early AI projects).

Tests. 80%+ real coverage across unit, integration, and endpoint tests. This is a major reason the system can evolve safely.

Verdict: production-leaning craftsmanship rather than research-grade prototyping. That’s hard to do and it shows.

The new challenge: reviewing, not writing

If AI can generate in 1 day what used to take 20 weeks, the bottleneck shifts to:

- Architecture validation. Is the design scalable, secure, maintainable?

- Security hardening. AI doesn’t add JWT auth or correct CORS unless you prompt explicitly.

- Performance tuning. Async, DB indexing, and caching patterns still need human oversight.

- Domain correctness. Only you know if a BrickLink multiplier or LEGO condition factor is realistic.

This flips the engineering equation:

- Before: 80% of time = writing code, 20% = reviewing.

- Now: 20% of time = generating prompts, 80% = reviewing/validating.

Performance & security: the necessary hardening

Performance bottlenecks (fixable):

- Sequential API calls where parallelism would help (market lookups).

- Synchronous file I/O inside async paths.

- Large image memory pressure.

- Missing DB-level pagination and indexing for future scale.
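The first two bottlenecks have a well-known shape in `asyncio`. The sketch below is illustrative only: `fetch_price` is a hypothetical stand-in for a real market lookup (the sleep simulates network latency and the returned price is a placeholder), and the concurrency cap of 5 is an assumption.

```python
import asyncio
from pathlib import Path
from typing import Dict, List, Tuple


async def fetch_price(item_id: str) -> Tuple[str, float]:
    """Hypothetical market lookup; the sleep stands in for network latency."""
    await asyncio.sleep(0.05)
    return item_id, 0.0  # placeholder price, not real market data


async def fetch_prices(item_ids: List[str], max_concurrent: int = 5) -> Dict[str, float]:
    """Run lookups concurrently, capped so the upstream API is not flooded."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def guarded(item_id: str) -> Tuple[str, float]:
        async with semaphore:
            return await fetch_price(item_id)

    results = await asyncio.gather(*(guarded(i) for i in item_ids))
    return dict(results)


async def read_image(path: Path) -> bytes:
    """Run blocking file I/O in a worker thread so the event loop stays free."""
    return await asyncio.to_thread(path.read_bytes)
```

With `asyncio.gather`, total wait time tracks the slowest lookup in each batch rather than the sum of all of them, and `asyncio.to_thread` (Python 3.9+) keeps disk reads from stalling other requests.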

Security gaps (must fix before public prod):

- Missing authentication/authorization.

- CORS defaults too permissive.

- No rate limiting or abuse protection on uploads.

These are common “early production” gaps. They’re not architectural flaws; they’re operational maturity tasks.
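For the upload abuse gap, a minimal in-process sketch looks like this. Everything here is an assumption for illustration: the 10 MB cap, the `SlidingWindowRateLimiter` class, and the `check_upload` helper. A multi-worker deployment would need shared state (for example a cache server) instead of a per-process dictionary.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict

MAX_UPLOAD_BYTES = 10 * 1024 * 1024  # assumed cap; tune for real image sizes


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per client within `window_seconds`."""

    def __init__(
        self,
        limit: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,  # injectable for tests
    ) -> None:
        self._limit = limit
        self._window = window_seconds
        self._clock = clock
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_id: str) -> bool:
        now = self._clock()
        hits = self._hits[client_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self._window:
            hits.popleft()
        if len(hits) >= self._limit:
            return False
        hits.append(now)
        return True


def check_upload(client_id: str, size_bytes: int, limiter: SlidingWindowRateLimiter) -> None:
    """Reject oversized or too-frequent uploads before any expensive image work."""
    if size_bytes > MAX_UPLOAD_BYTES:
        raise ValueError("upload exceeds size cap")
    if not limiter.allow(client_id):
        raise PermissionError("rate limit exceeded")
```

Running both checks before the image reaches any vision provider keeps abusive traffic from turning into API spend.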

Maintainability & technical debt: green across the board

- Clear module boundaries and responsibilities.

- Minimal TODOs.

- Dependencies are mainstream and maintained.

- Documentation exists (README, API docs) and code comments are useful.

Result: Adding features (e.g., bulk upload, dashboards, confidence explanations) will be straightforward once core hardening lands.

What impressed me most

- Multi-modal identification strategy. Intelligent routing across five methods, with fallbacks and confidence logic. This is where many projects stall; ours works and is extensible.

- Real tests + types. It’s rare to see AI pipelines with this level of test discipline; the payoff is lower regression risk and faster iteration.

- Domain-aware valuation. Pricing logic isn’t naïve; it considers condition, currency, and per-item nuance. That’s the difference between a demo and a tool we can trust.
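To show what condition- and currency-aware pricing can look like, here is a hedged sketch. The multipliers are illustrative placeholders, not the system's real factors or actual market data, and `estimate_value` is a hypothetical name.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative multipliers only; real factors would come from market data.
CONDITION_MULTIPLIERS = {
    "new_sealed": Decimal("1.00"),
    "used_complete": Decimal("0.70"),
    "used_incomplete": Decimal("0.45"),
}


def estimate_value(
    base_price_usd: Decimal,
    condition: str,
    fx_rate: Decimal = Decimal("1"),  # USD to target currency; 1 means stay in USD
) -> Decimal:
    """Scale a new-condition USD price by condition, then convert currency."""
    try:
        multiplier = CONDITION_MULTIPLIERS[condition]
    except KeyError:
        raise ValueError(f"unknown condition: {condition!r}") from None
    value = base_price_usd * multiplier * fx_rate
    # Decimal avoids binary floating-point drift in money arithmetic.
    return value.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

Whether a given multiplier is realistic is exactly the kind of domain question that still needs a human reviewer.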

What’s missing for “true production”

- AuthZ/AuthN everywhere. JWTs, roles, API keys for CLIs.

- CORS tightened. Only allow known domains; add security headers (HSTS, CSP).

- Abuse controls. Rate limits, size caps, CAPTCHAs on public endpoints.

- Observability. Structured logs, error tracking, perf dashboards, health checks.

- Scale levers. Postgres, object storage, async pipelines, job queues, response caching.

Roadmap (90 days) – Old School Estimate

Month 1 – Security & perf baselines

- JWT + roles, CORS hardening, rate limiting.

- Parallelize market lookups; move blocking I/O off the event loop.

Month 2 – Infrastructure

- SQLite → PostgreSQL, add indexes and pagination.

- S3 (or equivalent) for images; CI/CD; health checks & metrics.

Month 3 – UX & insight

- Bulk uploads; valuation history dashboard.

- Confidence explanations; scheduled reports.

- Load tests + per-endpoint budgets.

With prompt engineering (vibe coding), these should be doable in a week.

How long would this have taken without AI coding tools?

We’ve already got a credible traditional estimate: 16–20 weeks for a senior engineer. Here’s how the timeline shifts by work area across three approaches: no AI coding accelerators (Copilot/Cursor/Claude Code/ChatGPT), AI-assisted coding, and full AI code generation. All three still use hosted vision/LMM APIs for the product features.

How long would this system normally take?

| Scope / Approach | Senior Engineer (Traditional) | With AI Assist (Copilot / IntelliJ Plugins) | With Full AI Code Generation (Prompt-Driven) |
|---|---|---|---|
| Project scaffolding & setup | 3–4 weeks | 2–3 weeks | Hours |
| Core valuation logic & database models | 4–5 weeks | 3–4 weeks | Day 1 output |
| Multi-modal AI identification (5 strategies + fallbacks) | 5–6 weeks | 4–5 weeks | Day 1 output |
| Market integration (BrickLink API, OAuth, currency) | 3–4 weeks | 2–3 weeks | Day 1 output |
| Reporting (PDF/HTML) & CLI tools | 2–3 weeks | 2 weeks | Day 1 output |
| Testing & documentation | 2–3 weeks | 2 weeks | Day 1 output |
| Total delivery time | 16–20 weeks (4–5 months) | 12–14 weeks (3–4 months) | ~1 day (prompt programming + validation) |

In short: AI coding tools don’t replace design judgment, but they compress iteration loops, generate boilerplate, suggest tests and types, and speed up “known-good” patterns. On a system this size, that’s months saved.

The scorecard

- Overall system health: 8.2/10, 80%+ code coverage, 9.2/10 lint.

- Strengths: Multi-modal AI routing, clean architecture, tests & types, real market logic

- Gaps: Auth/CORS/rate-limits, async/perf, DB & storage for scale

- Risk now: Security (public endpoints) and perf under load

- Production posture: Green-light after 1 week of hardening

Final word

This isn’t a lab demo; it’s a product-grade foundation for AI toy valuation. With a brief security/perf sprint and a move to PostgreSQL + cloud storage, we’ll be in great shape to handle real traffic, bulk uploads, and richer analytics. And yes, the delta between “with” and “without” AI coding tools is material: the system punches above its weight precisely because modern AI assistants kept the team focused on the hard parts (architecture, data flow, and domain rules) rather than scaffolding and boilerplate.

We just witnessed the difference between “AI as a coding assistant” and “AI as a system generator.” For the LEGO Valuation System, that meant collapsing months of engineering into a single day.

The future of AI-driven development isn’t about faster typing; it’s about skipping the typing entirely and letting engineers focus on architecture, validation, and domain expertise.

In other words: the real innovation isn’t in the lines of code written, but in the time they no longer take to write.
