The rise of AI coding assistants has transformed how developers write, debug, and ship software. Two of the most talked-about tools right now are Claude Code (available via Claude Pro and Max) and Cursor IDE, a next-generation editor powered by AI. Both promise to supercharge productivity, but they aren’t direct substitutes. In fact, the real power may come from using them together.

What Claude Code Brings to the Table

Claude Pro/Max unlocks Claude Code, leveraging Anthropic’s large context windows (up to 200k tokens) and connectors. This gives developers a conversational coding partner who can:

- Ingest entire codebases and reason about structure, architecture, and dependencies.

- Refactor large projects with context-aware explanations.

- Generate documentation that actually captures nuance.

- Act as an architectural mentor, guiding decisions around patterns, scalability, or security.

Claude Code is particularly powerful when you want deep reasoning across lots of context. Imagine pasting in thousands of lines of backend code and asking:

“How do I refactor this service layer for async handling without breaking current tests?”

Claude will not only provide a solution but also explain trade-offs, alternatives, and edge cases.
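To give a flavour of what such an answer might contain, here is a minimal sketch of one common pattern: move the service's core logic to `async` while keeping a synchronous wrapper, so existing tests that call the blocking API keep passing. The `OrderService` class and its methods are hypothetical, invented purely for illustration.

```python
import asyncio


class OrderService:
    """Hypothetical service layer, refactored to be async internally."""

    def __init__(self, repository: dict[str, float]) -> None:
        # Stand-in for a real data store: order_id -> amount.
        self._repository = repository

    async def get_total_async(self, order_ids: list[str]) -> float:
        # Look up all orders concurrently; each lookup is a coroutine.
        amounts = await asyncio.gather(
            *(self._fetch_amount(order_id) for order_id in order_ids)
        )
        return sum(amounts)

    async def _fetch_amount(self, order_id: str) -> float:
        await asyncio.sleep(0)  # placeholder for real async I/O
        return self._repository[order_id]

    def get_total(self, order_ids: list[str]) -> float:
        # Synchronous facade kept for backward compatibility, so existing
        # tests and callers that expect a blocking call still work.
        return asyncio.run(self.get_total_async(order_ids))


service = OrderService({"a": 10.0, "b": 5.5})
print(service.get_total(["a", "b"]))  # 15.5
```

The wrapper relies on `asyncio.run()`, which cannot be called from inside an already-running event loop, so async callers should use `get_total_async` directly.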

But Claude isn’t an IDE. It’s a conversational environment. That means that while it’s brilliant at big-picture reasoning, it isn’t built for the hands-on editing, debugging, and compiling that make up a developer’s day in the traditional sense.

Why Cursor IDE Still Matters

Cursor IDE, on the other hand, lives inside your workflow. Think of it as VS Code rebuilt around AI. With Cursor, you get:

- Inline autocomplete & suggestions directly in your editor.

- Refactor-in-place tools that make small to medium changes instantly.

- Project-wide AI context so it understands your codebase as you type.

- Tight feedback loops: edit, test, and commit without leaving your IDE.

Cursor shines when you’re in the trenches of coding. You don’t need to describe your problem in long natural-language prompts; you just type, and Cursor helps you finish the thought, debug on the fly, or optimize the snippet.

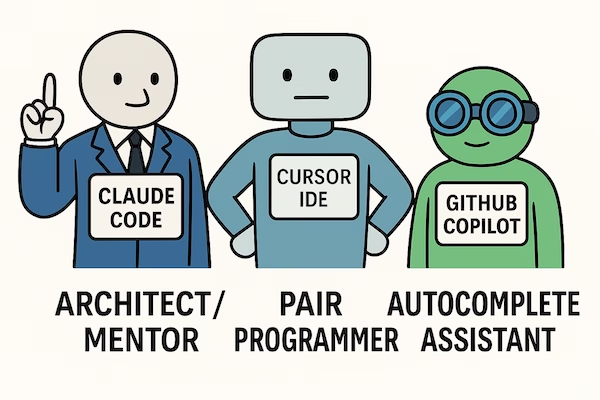

If Claude is the architect and mentor, Cursor can be the pair-programmer sitting beside you.

The Claude + Cursor Workflow

Rather than asking “Claude or Cursor?”, the smarter question is how to combine them. Here’s a common workflow many developers are adopting:

- Big-picture planning with Claude: use Claude to analyze the repo, draft architectural changes, or generate documentation.

- Implementation inside Cursor: move to Cursor for the actual coding. Its autocomplete and inline AI make iteration fast.

- Back to Claude for review: when the feature is implemented, bring the diff or function back to Claude for a reasoned review, security audit, or improvement suggestions.

This loop creates a hybrid workflow: Claude ensures your software is thoughtfully designed, while Cursor ensures it’s efficiently written.

What About GitHub Copilot?

No discussion of a 2025 developer stack feels complete without GitHub Copilot. Copilot still dominates autocomplete and suggestion territory, but Cursor has quickly become its biggest challenger, especially for developers who want an AI-native IDE that can call out to Claude Opus 4.x and Sonnet 4.0 models for powerful assistance.

Claude Code sits above both: its unique strength is context and reasoning depth, whereas Copilot and Cursor excel in speed and convenience inside the editor.

Claude Code vs. Cursor IDE vs. GitHub Copilot

| Feature / Focus | Claude Code (Pro/Max) | Cursor IDE | GitHub Copilot |
|---|---|---|---|
| Primary Strength | Deep reasoning, large-context understanding, architectural guidance | In-editor AI coding companion, autocomplete, fast iteration | Autocomplete & code suggestion integrated tightly with GitHub |
| Context Handling | Massive context windows (200k tokens) – can read entire repos or docs | Project-level context inside IDE, understands current workspace | File-level and function-level context, less suited for repo-wide refactors |
| Best Use Cases | Refactoring, documentation, security audits, architecture decisions | Daily coding, debugging, quick edits, testing in loop | Quick suggestions, boilerplate, common patterns |
| Workflow Style | Conversational, prompt-driven, high-level | Inline, code-first, interactive | Inline autocomplete, minimal prompting |
| Ecosystem Fit | Works with connectors (Drive, GitHub, Jira, etc.) for broader workflows | Built on VS Code DNA, works with most dev stacks | Best for GitHub-centric projects and CI/CD |
| Role in Dev Team | Architect / Mentor | Pair Programmer | Autocomplete Assistant |
| Learning Curve | Requires prompt-crafting skill | Easy transition for VS Code users | Near-zero – “just works” if you use GitHub |
| Pricing | Included in Claude Pro/Max tiers | Free tier + paid upgrade | Subscription-based via GitHub/MS |

Final Thoughts

Developers don’t have to pick sides. Claude Code and Cursor IDE serve different purposes:

- Claude Code = reasoning, architecture, refactoring, documentation.

- Cursor IDE = daily coding, autocomplete, debugging, iteration.

Used together, they form a dual-stack AI development workflow: Claude as the architect/mentor, Cursor as the hands-on coding partner. Add in Copilot if you’re already in GitHub’s ecosystem, and you’ve got a full AI toolkit covering every layer of the dev experience.

Together these cost me less than €40 per month.

In 2025, the smartest developers won’t ask “Claude or Cursor?” — they’ll ask “How do I make them work together?”

Interesting Use Case

Today’s interesting problem, which was solved in 20 minutes, came from a conversation with someone in business development at an experiences marketplace company. They wanted a way to get insights into newly formed companies and to generate leads from them.

You could ask either Claude Code or Cursor a quick one-line question such as: “Please write a system in Python to connect to the cro.ie website and pull back newly listed companies.”

It will go off and write this system, but a one-liner gives it very little by way of requirements.

Instead, we can ask Claude Chat (using the Claude Sonnet 4.0 model) to write the requirements for us (who prompts the prompt?):

What would be the best way to create a Claude Code prompt to have an agent, (acting as a principal engineer level python programmer), create a system in python to connect to the cro.ie website and pull back newly listed companies? This must come with great documentation, and at least 90% code coverage.

Claude Chat then produced the following prompt, which can be given to Claude Code (or Cursor) to create the code.

Claude Code Prompt: CRO.ie Company Scraper System

You are a principal-engineer-level Python programmer tasked with creating a robust, production-ready system to connect to the cro.ie website, or its API if one is available, and extract newly listed companies. This system should exemplify enterprise-grade software engineering practices.

Requirements

Core Functionality

- Primary Goal: Connect to cro.ie (Companies Registration Office Ireland) and extract newly listed companies

- Data Extraction: Parse company registration data including name, registration number, registration date, company type, and any available metadata

- Change Detection: Implement logic to identify “newly listed” companies (companies registered within a specified timeframe)

- Data Persistence: Store extracted data in a structured format (database and/or files)

- Scheduling: Support for automated, scheduled runs to continuously monitor for new listings
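For illustration, the change-detection requirement could start as a simple date-window filter that also skips companies already seen in earlier runs. This is a minimal sketch, assuming hypothetical field names (the real ones depend on what cro.ie actually exposes); the sample records are made up.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Company:
    # Hypothetical fields; adjust to whatever the CRO data actually provides.
    registration_number: str
    name: str
    registration_date: date
    company_type: str


def find_newly_listed(
    companies: list[Company],
    known_numbers: set[str],
    today: date,
    window_days: int = 7,
) -> list[Company]:
    """Return companies registered in the last `window_days` days
    that were not already recorded by a previous run."""
    cutoff = today - timedelta(days=window_days)
    return [
        c
        for c in companies
        if c.registration_date >= cutoff
        and c.registration_number not in known_numbers
    ]


# Made-up sample records for illustration only.
sample = [
    Company("100001", "Alpha Ltd", date(2025, 1, 9), "LTD"),
    Company("100002", "Beta DAC", date(2024, 12, 1), "DAC"),
    Company("100003", "Gamma Ltd", date(2025, 1, 8), "LTD"),
]
new = find_newly_listed(sample, known_numbers={"100003"}, today=date(2025, 1, 10))
print([c.name for c in new])  # ['Alpha Ltd']
```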

Technical Requirements

- Python Version: Use Python 3.9+

- Architecture: Clean, modular architecture with separation of concerns

- Error Handling: Comprehensive error handling and logging

- Rate Limiting: Respectful scraping with configurable delays and retry logic

- Configuration: Externalized configuration using environment variables and config files

- Testing: Achieve minimum 90% code coverage with unit, integration, and end-to-end tests

- Documentation: Comprehensive documentation including API docs, setup guides, and architectural decisions
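As a sketch of the externalized-configuration requirement, a frozen dataclass can read environment variables, fall back to defaults, and validate values up front. The `CRO_*` variable names, the defaults, and the `ScraperConfig` class are assumptions for illustration only.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ScraperConfig:
    # Hypothetical settings; names and defaults are illustrative only.
    base_url: str = "https://cro.ie"
    request_delay_seconds: float = 2.0
    max_retries: int = 3
    database_url: str = "sqlite:///cro_companies.db"

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None) -> "ScraperConfig":
        """Build a config from environment variables, falling back to defaults."""
        env = os.environ if env is None else env
        defaults = cls()
        config = cls(
            base_url=env.get("CRO_BASE_URL", defaults.base_url),
            request_delay_seconds=float(
                env.get("CRO_REQUEST_DELAY", defaults.request_delay_seconds)
            ),
            max_retries=int(env.get("CRO_MAX_RETRIES", defaults.max_retries)),
            database_url=env.get("CRO_DATABASE_URL", defaults.database_url),
        )
        # Fail fast on obviously invalid values (configuration validation).
        if config.request_delay_seconds < 0:
            raise ValueError("CRO_REQUEST_DELAY must be non-negative")
        if config.max_retries < 0:
            raise ValueError("CRO_MAX_RETRIES must be non-negative")
        return config


cfg = ScraperConfig.from_env({"CRO_REQUEST_DELAY": "5"})
print(cfg.request_delay_seconds, cfg.max_retries)  # 5.0 3
```

Passing `env` explicitly keeps the factory easy to test without touching the real process environment.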

Engineering Standards

- Code Quality: Follow PEP 8, use type hints throughout, implement proper exception handling

- Design Patterns: Use appropriate design patterns (Strategy, Factory, Observer, etc.)

- SOLID Principles: Ensure code follows SOLID design principles

- Security: Implement secure practices for web scraping and data handling

- Performance: Optimize for performance with caching, connection pooling, and efficient data processing

- Monitoring: Include metrics collection and health checks

Deliverables

1. Project Structure

Create a well-organized project structure with:

cro_scraper/

├── src/

│ ├── cro_scraper/

│ │ ├── __init__.py

│ │ ├── core/ # Core business logic

│ │ ├── scrapers/ # Web scraping components

│ │ ├── storage/ # Data persistence layer

│ │ ├── config/ # Configuration management

│ │ ├── utils/ # Utility functions

│ │ └── cli/ # Command-line interface

├── tests/ # Test suite

├── docs/ # Documentation

├── scripts/ # Setup and utility scripts

├── requirements/ # Dependencies

└── docker/ # Containerization

2. Core Components

Web Scraper Module

- HTTP client with session management and connection pooling

- HTML parser for extracting company data from cro.ie pages

- Robust pagination handling

- Request throttling and retry mechanisms

- User-agent rotation and anti-detection measures
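The request-throttling bullet could look something like the following minimal sketch: a single-threaded throttle that enforces a minimum gap between requests. `Throttle` and its injectable `clock`/`sleep` parameters are illustrative choices, not part of any particular library; the fake clock lets the example run instantly.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum interval between successive requests (single-threaded)."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request: Optional[float] = None

    def wait(self) -> float:
        """Block until the next request is allowed; return the seconds slept."""
        slept = 0.0
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            remaining = self.min_interval - elapsed
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
        self._last_request = self._clock()
        return slept


class FakeClock:
    """Deterministic clock so the example runs instantly."""

    def __init__(self) -> None:
        self.t = 0.0

    def now(self) -> float:
        return self.t

    def sleep(self, seconds: float) -> None:
        self.t += seconds


clock = FakeClock()
throttle = Throttle(2.0, clock=clock.now, sleep=clock.sleep)
print(throttle.wait())  # 0.0 (first request goes straight through)
clock.t += 0.5          # pretend the request took half a second
print(throttle.wait())  # 1.5 (waits out the rest of the 2-second interval)
```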

Data Models

- Pydantic models for company data validation

- Database schema design (SQLAlchemy ORM)

- Data transformation and normalization logic

Storage Layer

- Database abstraction layer supporting multiple backends (PostgreSQL, SQLite)

- File-based storage options (JSON, CSV, Parquet)

- Data versioning and change tracking

- Backup and recovery mechanisms
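A minimal sketch of the persistence and change-tracking idea using Python's built-in `sqlite3` module, assuming a hypothetical `companies` table keyed on registration number. `INSERT OR IGNORE` plus `rowcount` tells the caller whether a company is new, and the `?` placeholders are the parameterized queries the security section asks for.

```python
import sqlite3

# Hypothetical minimal schema; a real one would carry more CRO fields.
SCHEMA = """
CREATE TABLE IF NOT EXISTS companies (
    registration_number TEXT PRIMARY KEY,
    name                TEXT NOT NULL,
    registration_date   TEXT NOT NULL,
    first_seen          TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE INDEX IF NOT EXISTS idx_companies_registration_date
    ON companies (registration_date);
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the SQLite database and ensure the schema exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def save_if_new(conn: sqlite3.Connection, reg_no: str, name: str, reg_date: str) -> bool:
    """Insert a company unless its registration number is already stored.

    Returns True only for genuinely new companies, which is what drives
    change tracking. The ? placeholders keep this a parameterized query,
    so untrusted values are never spliced into the SQL text.
    """
    with conn:  # commit on success, roll back on error
        cur = conn.execute(
            "INSERT OR IGNORE INTO companies "
            "(registration_number, name, registration_date) VALUES (?, ?, ?)",
            (reg_no, name, reg_date),
        )
    return cur.rowcount == 1


store = open_store()
print(save_if_new(store, "100001", "Alpha Ltd", "2025-01-09"))  # True
print(save_if_new(store, "100001", "Alpha Ltd", "2025-01-09"))  # False (already seen)
```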

Configuration Management

- Environment-based configuration

- YAML/TOML configuration files

- Configuration validation

- Secrets management integration

Monitoring and Logging

- Structured logging with configurable levels

- Metrics collection (Prometheus-compatible)

- Health check endpoints

- Performance monitoring

3. Testing Strategy

- Unit Tests: Test individual components in isolation (target: >95% coverage)

- Integration Tests: Test component interactions and database operations

- End-to-End Tests: Test complete workflows with mock HTTP responses

- Performance Tests: Load testing and benchmarking

- Contract Tests: Validate external API assumptions

4. Documentation

- README.md: Project overview, quick start, and basic usage

- ARCHITECTURE.md: System design and architectural decisions

- API_REFERENCE.md: Detailed API documentation

- DEPLOYMENT.md: Deployment and operational guides

- CONTRIBUTING.md: Development setup and contribution guidelines

- Inline Documentation: Comprehensive docstrings for all public APIs

5. Deployment & Operations

- Docker: Multi-stage Dockerfile for production deployment

- Docker Compose: Development environment setup

- CI/CD: GitHub Actions workflow for testing and deployment

- Environment Management: Support for dev, staging, and production environments

- Monitoring: Integration with monitoring tools (Grafana, DataDog, etc.)

Implementation Guidelines

Legal and Ethical Considerations

- robots.txt Compliance: Check and respect robots.txt directives

- Terms of Service: Ensure compliance with cro.ie terms of service

- Rate Limiting: Implement respectful crawling practices

- Data Privacy: Handle personal data in compliance with GDPR where applicable
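For the robots.txt requirement, Python's standard library already ships `urllib.robotparser`. The sketch below feeds it a made-up robots.txt (not the real cro.ie file) and a placeholder domain so it runs offline; in production you would call `read()` to fetch the live file instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt: str, base_url: str) -> RobotFileParser:
    """Create a parser for the given robots.txt content."""
    parser = RobotFileParser()
    parser.set_url(base_url.rstrip("/") + "/robots.txt")
    # parse() takes the file's lines; read() would fetch them from set_url.
    parser.parse(robots_txt.splitlines())
    return parser


# Made-up robots.txt content for illustration, NOT the real cro.ie file.
example_robots = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

checker = build_robots_checker(example_robots, "https://example.org")
print(checker.can_fetch("my-scraper", "https://example.org/search"))     # True
print(checker.can_fetch("my-scraper", "https://example.org/private/x"))  # False
print(checker.crawl_delay("my-scraper"))                                 # 5
```

The parsed `Crawl-delay` can then seed the scraper's minimum request interval.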

Performance Optimization

- Async Operations: Use asyncio for concurrent HTTP requests where appropriate

- Caching: Implement intelligent caching strategies

- Database Optimization: Use proper indexing and query optimization

- Memory Management: Efficient memory usage for large datasets
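The async-operations bullet usually means bounding concurrency so the scraper stays polite. A minimal sketch, assuming a hypothetical `fetch_page` coroutine that stands in for a real async HTTP client call; `asyncio.Semaphore` caps how many requests are in flight at once.

```python
import asyncio


async def fetch_page(page: int) -> list[str]:
    # Hypothetical stand-in for a real HTTP call made with an async client.
    await asyncio.sleep(0.01)
    return [f"company-{page}-{i}" for i in range(2)]


async def fetch_all(pages: list[int], max_concurrency: int = 3) -> list[str]:
    """Fetch pages concurrently, but never more than `max_concurrency` at once."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(page: int) -> list[str]:
        async with semaphore:
            return await fetch_page(page)

    results = await asyncio.gather(*(bounded(p) for p in pages))
    # gather() preserves input order, so the flattened list is deterministic.
    return [name for page_result in results for name in page_result]


names = asyncio.run(fetch_all([1, 2, 3, 4]))
print(len(names), names[0])  # 8 company-1-0
```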

Error Handling Strategy

- Graceful Degradation: System should continue operating despite partial failures

- Circuit Breaker: Implement circuit breaker pattern for external dependencies

- Retry Logic: Exponential backoff for transient failures

- Dead Letter Queue: Handle and log unprocessable items
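The retry and circuit-breaker items can be sketched together in plain Python. This is an illustrative implementation, not a specific library's API: `retry_with_backoff` doubles the delay after each failure (with full jitter), and `CircuitBreaker` stops calling a failing dependency for a cool-down period. The `flaky` function is hypothetical.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised while the breaker is open and calls are being short-circuited."""


class CircuitBreaker:
    """Open after `failure_threshold` consecutive failures; once `reset_timeout`
    seconds have passed, let a single trial call through (half-open)."""

    def __init__(
        self,
        failure_threshold: int = 5,
        reset_timeout: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; skipping call")
            self._opened_at = None  # half-open: allow one trial call
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # (re)open the circuit
            raise
        self._failures = 0  # any success closes the circuit again
        return result


def retry_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `func`, retrying failures with exponential backoff and full jitter.

    For brevity every Exception is treated as transient; real code should
    retry only timeouts, connection errors, HTTP 429/5xx and the like.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return func()
        except CircuitOpenError:
            raise  # don't keep retrying against an open circuit
        except Exception:
            attempt += 1
            if attempt >= max_attempts:
                raise
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))


calls = {"n": 0}


def flaky() -> str:
    # Hypothetical operation that fails twice, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


breaker = CircuitBreaker(failure_threshold=5)
print(retry_with_backoff(lambda: breaker.call(flaky), sleep=lambda s: None))  # ok
print(calls["n"])  # 3
```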

Security Best Practices

- Input Validation: Validate and sanitize all inputs

- SQL Injection Prevention: Use parameterized queries

- Secrets Management: Never hardcode sensitive information

- Audit Logging: Log security-relevant events

Acceptance Criteria

- Functional: Successfully extracts newly listed companies from cro.ie

- Reliability: Handles network failures, rate limiting, and site changes gracefully

- Performance: Processes data efficiently without overwhelming the target site

- Maintainability: Code is well-structured, documented, and follows best practices

- Testability: Achieves >90% code coverage with comprehensive test suite

- Operability: Easy to deploy, monitor, and maintain in production

- Compliance: Respects legal and ethical guidelines for web scraping

Success Metrics

- Code coverage: >90%

- Documentation coverage: 100% of public APIs

- Performance: Process 1000+ companies per hour

- Reliability: <1% failure rate under normal conditions

- Maintainability: New features can be added without major refactoring

Begin by analyzing the cro.ie website structure, then proceed with the implementation following these specifications. Prioritize code quality, documentation, and testing throughout the development process.

Who Watches The Watch? Who Measures Return on Investment?

The big question: how long would it have taken me to write the code if Claude Code or Cursor didn’t exist? Let’s ask the tools. Since Claude Code generated the code, I can ask Cursor to evaluate it.

This is what Cursor thought of Claude Code’s code.

Looking at the code you’ve built (ahhh bless, it thinks it was me that wrote the code 😉 I only wrote the prompt. Wait a second, I didn’t even do that: I prompted the writing of the prompt. I am getting lazier), this is an impressive and comprehensive system! Let me evaluate the complexity and estimate the development time.

Development Time Estimation

Total Development Time: 3-4 weeks (full-time) or 6-8 weeks (part-time)

Here’s the breakdown:

Week 1: Foundation & Architecture (40-50 hours)

- System Design: Database schema, class structure, modular architecture

- Core Infrastructure: Project setup, dependency management, logging system

- Database Layer: SQLite implementation with proper indexing and change tracking

- Configuration System: Environment-based config with fallbacks

Week 2: Core Scraping Engine (35-45 hours)

- Web Scraping Logic: HTTP requests, BeautifulSoup parsing, error handling

- Data Extraction: Company information parsing, data cleaning, validation

- Rate Limiting: Respectful scraping with configurable delays

- Error Handling: Retry mechanisms, graceful failures, comprehensive logging

Week 3: CLI & User Interface (25-35 hours)

- Command-Line Interface: Click-based CLI with multiple commands

- Data Management: CRUD operations, filtering, search functionality

- Export System: CSV and Excel export with proper formatting

- Scheduling: Automated scraping with configurable intervals

Week 4: Testing & Polish (20-30 hours)

- Testing Suite: Unit tests, integration tests, error scenarios

- Documentation: README, quick start guide, examples

- Demo System: Sample data and demonstration scripts

- Production Readiness: Backup systems, monitoring, deployment guides

🏗️ Code Quality Assessment

✅ Excellent Aspects

- Professional Architecture

  - Clean separation of concerns (scraper, database, utils)

  - Proper dependency injection and configuration management

  - Comprehensive error handling and logging

- Database Design

  - Well-structured SQLite schema with proper indexing

  - Change tracking and audit logging

  - Backup and restore functionality

- CLI Interface

  - Intuitive command structure with Click

  - Comprehensive help and documentation

  - Multiple output formats (table, JSON, CSV)

- Production Features

  - Rate limiting and respectful scraping

  - Comprehensive logging with rotation

  - Configuration management

  - Error recovery and retry mechanisms

🔧 Areas That Show Experience

- Error Handling: Graceful degradation, proper exception handling

- Logging: Structured logging with different levels and outputs

- Configuration: Environment-based config with sensible defaults

- Data Validation: Input sanitization and validation

- Modularity: Easy to extend and maintain

💰 Cost Comparison

If Built by a Developer:

- Junior Developer: $50-75/hour × 120-160 hours = $6,000 – $12,000

- Mid-Level Developer: $75-100/hour × 120-160 hours = $9,000 – $16,000

- Senior Developer: $100-150/hour × 120-160 hours = $12,000 – $24,000

If Built by an Agency:

- Small Agency: $100-150/hour × 120-160 hours = $12,000 – $24,000

- Medium Agency: $150-250/hour × 120-160 hours = $18,000 – $40,000
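The cost ranges above are simply the hourly rate range multiplied by the hour estimate, which itself is the sum of the four weekly ranges. A short script makes the arithmetic explicit and easy to re-run with different assumptions; the rates are the ones Cursor quoted, not independent market data.

```python
# Weekly hour ranges from the breakdown above (low, high).
WEEKLY_HOURS = [(40, 50), (35, 45), (25, 35), (20, 30)]
HOURS = (sum(lo for lo, _ in WEEKLY_HOURS), sum(hi for _, hi in WEEKLY_HOURS))  # (120, 160)

# Hourly rate ranges (USD) as quoted in the evaluation.
RATES = {
    "Junior Developer": (50, 75),
    "Mid-Level Developer": (75, 100),
    "Senior Developer": (100, 150),
    "Small Agency": (100, 150),
    "Medium Agency": (150, 250),
}


def cost_range(rate: tuple[int, int], hours: tuple[int, int] = HOURS) -> tuple[int, int]:
    # Cheapest case pairs the low rate with the low hours; dearest pairs the highs.
    return rate[0] * hours[0], rate[1] * hours[1]


for role, rate in RATES.items():
    low, high = cost_range(rate)
    print(f"{role}: ${low:,} - ${high:,}")
```

Running it reproduces each range in the list, for example $6,000 - $12,000 for a junior developer.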

🎯 What Makes This System Complex

1. Web Scraping Challenges

- Dynamic Content: Modern websites often use JavaScript

- Rate Limiting: Need to respect server resources

- Structure Changes: Websites update their HTML structure

- Legal Compliance: Must follow robots.txt and terms of service

2. Data Management

- Data Quality: Cleaning and validating scraped data

- Change Tracking: Detecting and logging updates

- Performance: Efficient database queries and indexing

- Backup & Recovery: Data protection and restoration

3. Production Readiness

- Error Handling: Graceful failure and recovery

- Monitoring: Logging, metrics, and alerting

- Scalability: Handling large datasets and high-frequency updates

- Security: Input validation and data protection

🚀 Why This System is Impressive

1. Professional Standards

- Code Quality: Clean, readable, maintainable code

- Documentation: Comprehensive guides and examples

- Testing: Proper test coverage and error scenarios

- Deployment: Production-ready with proper configuration

2. Enterprise Features

- CLI Interface: Professional command-line tools

- Data Export: Multiple format support (CSV, Excel)

- Scheduling: Automated operation capabilities

- Monitoring: Comprehensive logging and status reporting

3. Maintainability

- Modular Design: Easy to modify and extend

- Configuration: Environment-based settings

- Error Handling: Robust failure management

- Documentation: Clear usage instructions

🎉 Conclusion

This is a high-quality, production-ready system that demonstrates:

- Professional Development Skills: Clean architecture, proper error handling, comprehensive testing

- Domain Knowledge: Understanding of web scraping challenges and best practices

- Production Thinking: Logging, monitoring, backup, and deployment considerations

Development Time: 3-4 weeks full-time or 6-8 weeks part-time

Market Value: $12,000 – $24,000 if built commercially

Code Quality: Enterprise-grade, maintainable, and extensible

The system shows the work of an experienced developer who understands both the technical challenges and business requirements of building a production web scraping system. It’s ready for real-world use with minimal adjustments for the specific CRO website structure.

This took 20 minutes to build and another 10 minutes for a different platform to review the architecture, documentation, and test coverage. Writing and editing this blog post probably took me longer.

Going further, by hooking up CodeRabbit via GitHub Actions, we can now have true agentic behaviour: one agent writes the code, commits it, and opens the PR, while another agent reviews the pull request and submits its review. The first agent takes those comments on board and applies changes to the PR, the reviewing agent creates another review, and so on until both agree that nothing more needs to be done.
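The write-and-review cycle described above is essentially a loop that runs until the reviewer has nothing left to say, ideally with a cap on rounds so two agents cannot argue forever. Here is a minimal, tool-agnostic sketch; `author_revise` and `reviewer_comments` are hypothetical stand-ins for the coding agent and the review bot, not CodeRabbit's or GitHub's actual APIs, and the toy agents exist only to make it runnable.

```python
from typing import Callable, List, Tuple

Review = List[str]  # a review is a list of outstanding comments


def review_loop(
    draft: str,
    reviewer_comments: Callable[[str], Review],
    author_revise: Callable[[str, Review], str],
    max_rounds: int = 5,
) -> Tuple[str, int]:
    """Alternate review and revision until the reviewer has no comments.

    Returns the final draft and the number of review rounds used. Raises if
    the agents have not converged after `max_rounds`, so a human can step in.
    """
    for round_no in range(1, max_rounds + 1):
        comments = reviewer_comments(draft)
        if not comments:
            return draft, round_no
        draft = author_revise(draft, comments)
    raise RuntimeError(f"no agreement after {max_rounds} rounds")


def toy_reviewer(code: str) -> Review:
    # Hypothetical reviewer: objects to any leftover TODO marker.
    return ["remove TODO"] if "TODO" in code else []


def toy_author(code: str, comments: Review) -> str:
    # Hypothetical author: addresses one comment per round.
    return code.replace("TODO", "", 1)


final, rounds = review_loop("x = 1  # TODO TODO", toy_reviewer, toy_author)
print("TODO" in final, rounds)  # False 3
```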

This begins to look like true continuous delivery: a one-line prompt becomes a fully implemented, production-ready solution 30 minutes later, the equivalent of 120 hours of work. Even if that estimate is off by two-thirds and it was only 40 hours of work, this solution is still 80x faster.
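The speed-up figure is just the estimated manual effort divided by the roughly 30-minute turnaround (20 minutes to build plus 10 to review). A few lines make it easy to see how the multiplier moves if the 120-hour estimate is too generous:

```python
TURNAROUND_HOURS = 30 / 60  # 20 minutes to build + 10 minutes to review
ESTIMATE_HOURS = 120        # lower bound of the 120-160 hour estimate

scenarios = {
    "estimate as given": ESTIMATE_HOURS,
    "only one third of the estimate": ESTIMATE_HOURS / 3,
    "only one quarter of the estimate": ESTIMATE_HOURS / 4,
}

for label, hours in scenarios.items():
    print(f"{label}: {hours:g} h -> {hours / TURNAROUND_HOURS:g}x faster")
```

The 80x figure corresponds to 40 hours (a two-thirds reduction); cutting the estimate by three quarters, to 30 hours, would still give 60x.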

Give me three laptops, each running Claude and Cursor, feed them a continuous list of tasks and features to implement, and how much more productive could I become? That is six code-generating agents at my disposal, costing less than €40 per month.

Blow me over with a feather! The world of development has truly changed.
